Teasing apart the representational spaces of ANN language models to discover key axes of model-to-brain alignment

Abstract

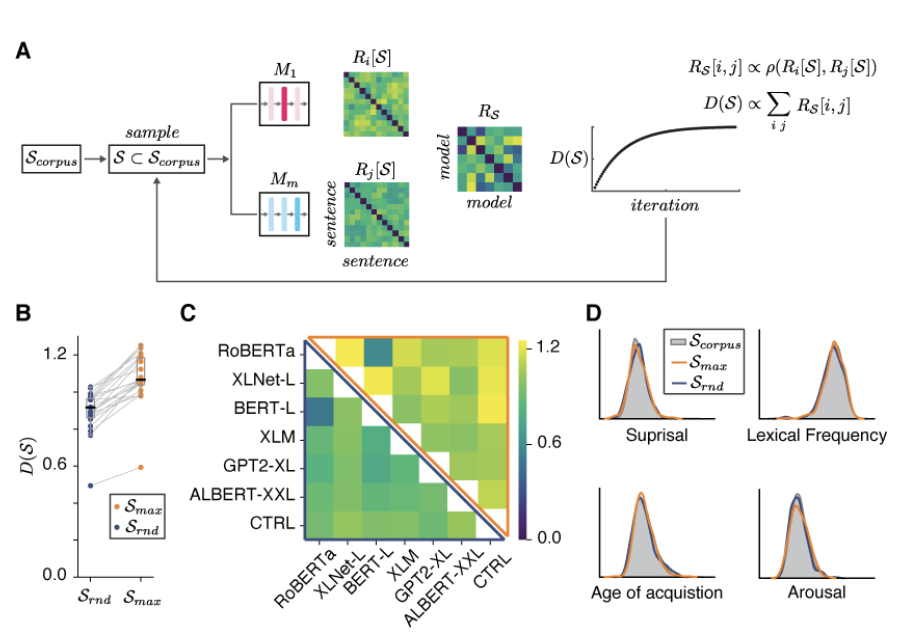

A central goal of neuroscience is to uncover the neural representations that underlie sensorimotor and cognitive processes. Artificial neural networks (ANNs) can provide hypotheses about the nature of these representations. However, in the domain of language, multiple ANN models provide a good match to human neural responses. To dissociate these models, we devised an optimization procedure to select stimuli for which the models' representations are maximally distinct. Surprisingly, we found that all models struggle to predict brain responses (measured with fMRI) to such stimuli. We further (a) confirmed that these sentences are not outliers in terms of linguistic properties and that neural responses to them are as reliable as responses to random sentences, and (b) replicated this finding in another, previously collected dataset. Stimuli for which model representations differ can be used to uncover dimensions of ANN-to-brain alignment and to build more brain-like computational models of language.
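The abstract does not spell out the optimization procedure, so the sketch below is only one plausible way to select stimuli on which model representations diverge; it is not the authors' method. It assumes that each model yields one embedding vector per sentence, that each model's representational geometry on a candidate subset is summarized as a correlation-distance representational dissimilarity matrix (RDM, 1 minus Pearson r), and that "maximally distinct" means maximizing the mean pairwise dissimilarity between the models' RDMs. The search is a simple random-swap hill climb. The function names (`rdm`, `model_disagreement`, `select_divergent_stimuli`) and parameters are hypothetical.

```python
import itertools

import numpy as np


def rdm(embeddings: np.ndarray) -> np.ndarray:
    """Correlation-distance RDM (1 - Pearson r) between sentence vectors (rows)."""
    return 1.0 - np.corrcoef(embeddings)


def model_disagreement(embeddings_by_model: dict, subset) -> float:
    """Mean pairwise (1 - Pearson r) between the models' RDMs on `subset`.

    Assumption: this is the objective to maximize; the source does not specify one.
    """
    idx = np.asarray(list(subset))
    iu = np.triu_indices(len(idx), k=1)  # upper triangle, diagonal excluded
    vecs = [rdm(emb[idx])[iu] for emb in embeddings_by_model.values()]
    dists = [1.0 - np.corrcoef(a, b)[0, 1] for a, b in itertools.combinations(vecs, 2)]
    return float(np.mean(dists))


def select_divergent_stimuli(embeddings_by_model: dict, k: int, n_iter: int = 2000, seed: int = 0):
    """Hypothetical hill-climbing search for k sentences that maximize model disagreement.

    Starts from a random subset and repeatedly swaps one chosen sentence for an
    unchosen one, keeping the swap only if the disagreement score increases.
    """
    rng = np.random.default_rng(seed)
    n = next(iter(embeddings_by_model.values())).shape[0]
    subset = [int(i) for i in rng.choice(n, size=k, replace=False)]
    best = model_disagreement(embeddings_by_model, subset)
    for _ in range(n_iter):
        outside = np.setdiff1d(np.arange(n), subset)  # sentences not yet chosen
        candidate = subset.copy()
        candidate[int(rng.integers(k))] = int(rng.choice(outside))
        score = model_disagreement(embeddings_by_model, candidate)
        if score > best:  # greedy acceptance: only improvements are kept
            subset, best = candidate, score
    return sorted(subset), best
```

A greedy swap search is cheap and easy to reason about but can stall in local optima; restarting from several seeds, or using a stochastic acceptance rule, are common alternatives. In practice the embeddings would come from each language model's activations for the same candidate sentences, with `k` (for example, `k=8` drawn from 40 candidates) set by the experiment's stimulus budget.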