Noga Zaslavsky

Assistant Professor

NYU Psychology

About me

I’m an Assistant Professor in the Psychology Department at NYU.

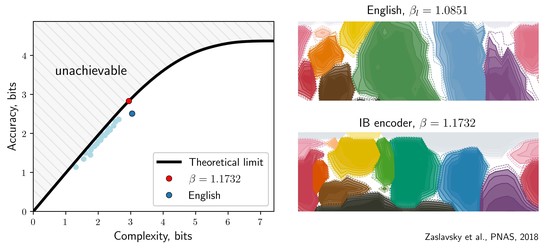

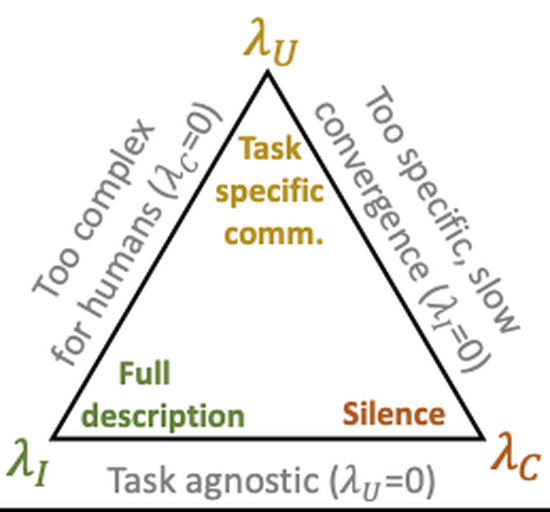

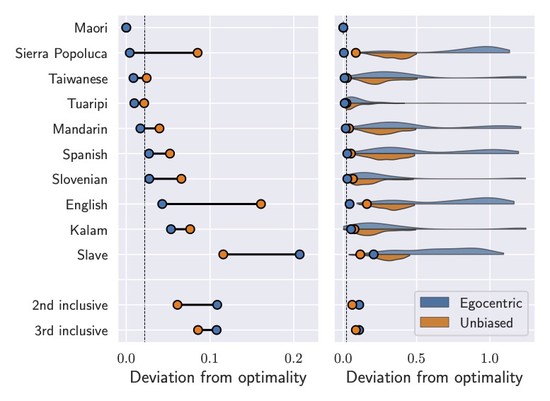

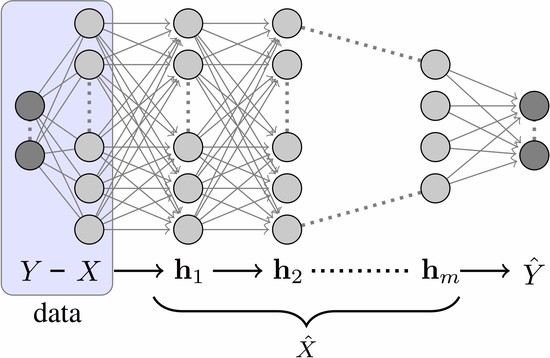

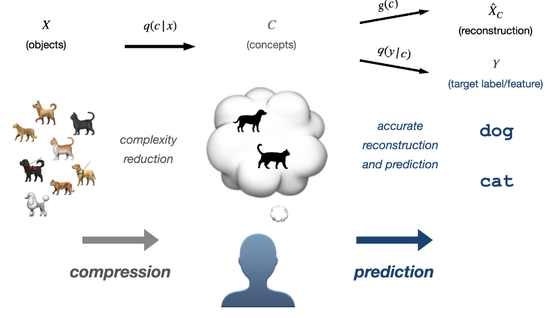

My research aims to understand language, learning, and reasoning from first principles, building on ideas and methods from machine learning and information theory. I’m particularly interested in finding computational principles that explain how we use language to represent the environment; how this representation can be learned in humans and in artificial neural networks; how it interacts with other cognitive functions, such as perception, action, social reasoning, and decision making; and how it evolves over time and adapts to changing environments and social needs. I believe that such principles could advance our understanding of human and artificial cognition, as well as guide the development of artificial agents that can evolve human-like communication systems on their own, without requiring huge amounts of human-generated training data.